From Audio to Score: Automatic Music Transcription Technology

A deep dive into Automatic Music Transcription (AMT), the process of converting acoustic signals into symbolic music notation. Explore the challenges of polyphonic transcription, the annotation bottleneck, and state-of-the-art deep learning solutions.

The Musical Equivalent of Speech Recognition

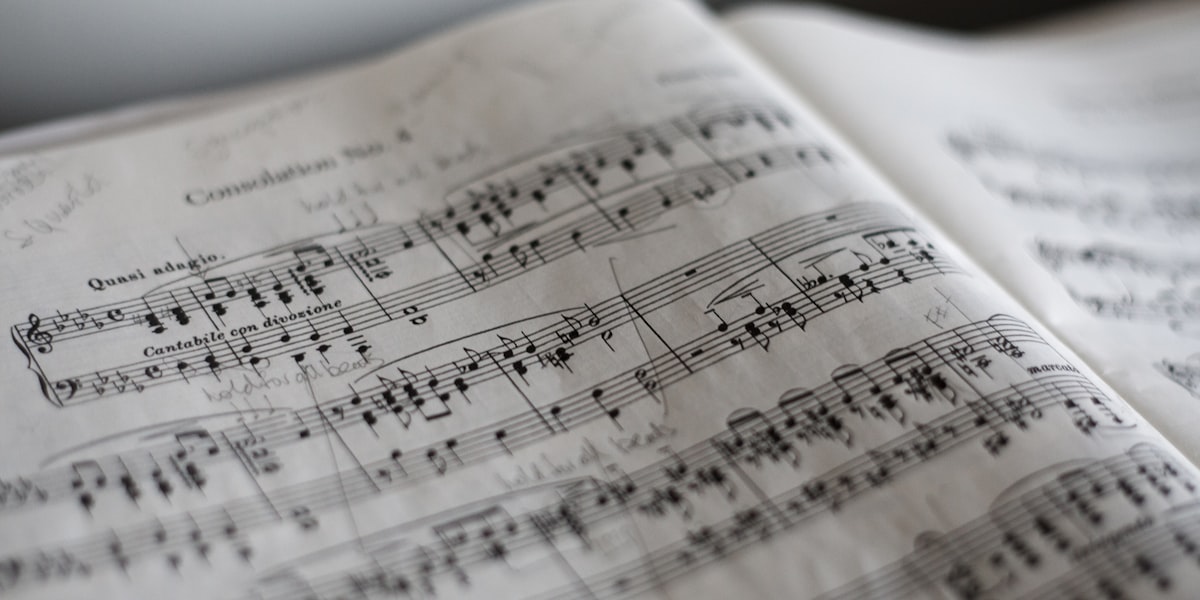

Automatic Music Transcription (AMT) is the process of converting an acoustic music signal into a symbolic representation, such as a piano-roll or a formal musical score. It is a task of immense complexity, widely considered the musical equivalent of Automatic Speech Recognition (ASR).

The Fundamental Link

A successful AMT system is a cornerstone of any music AI copilot, providing the fundamental link between the continuous world of audio signals and the discrete, structured world of symbolic music processing. It enables AI systems to "understand" music at a structural level, not just as raw audio.

The Challenge of Polyphonic Transcription

While transcribing a single melodic line (monophonic music) is a largely solved problem, transcribing polyphonic music, where multiple notes from multiple instruments sound simultaneously, presents profound challenges:

A musical sound is composed of a fundamental frequency and a series of overtones, or harmonics. In polyphonic music, the harmonics of different notes frequently overlap in the frequency spectrum.

Example: In a simple C major chord (C-E-G), a significant percentage of each note's harmonics are obscured by the harmonics of the other notes, making it an extremely under-determined problem to infer which fundamental frequencies are present.
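The overlap in the chord example can be estimated with a short calculation. The sketch below is illustrative only: it assumes equal temperament, ideal harmonic series, and two arbitrary parameters (16 harmonics per note and a 25-cent tolerance) that are not from the source. Real masking also depends on harmonic amplitudes and on the frequency resolution of the analysis.

```python
import math

def midi_to_hz(midi_note):
    """Equal-tempered frequency of a MIDI note number (A4 = 69 = 440 Hz)."""
    return 440.0 * 2 ** ((midi_note - 69) / 12)

def cents_apart(f1, f2):
    """Absolute distance between two frequencies in cents."""
    return abs(1200 * math.log2(f1 / f2))

def overlap_fraction(f0, other_f0s, n_harmonics=16, tol_cents=25.0):
    """Fraction of f0's first n harmonics that lie within tol_cents
    of some harmonic of another simultaneously sounding note."""
    own = [f0 * k for k in range(1, n_harmonics + 1)]
    others = [f * k for f in other_f0s for k in range(1, n_harmonics + 1)]
    hits = sum(any(cents_apart(h, o) <= tol_cents for o in others) for h in own)
    return hits / n_harmonics

# C major triad: C4, E4, G4
chord = {"C4": midi_to_hz(60), "E4": midi_to_hz(64), "G4": midi_to_hz(67)}
for name, f0 in chord.items():
    rest = [f for n, f in chord.items() if n != name]
    print(f"{name}: {overlap_fraction(f0, rest):.0%} of harmonics overlap")
```

For instance, the third harmonic of C4 and the second harmonic of G4 land within about two cents of each other, so a spectrogram alone cannot say which note contributed that energy.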

Musical voices are not statistically independent. Musicians coordinate their timing precisely, governed by the music's metrical structure. This temporal correlation violates the common signal processing assumption of source independence, which would otherwise simplify the separation task.

The single greatest impediment to progress in AMT is the scarcity of large-scale, high-quality, ground-truth datasets. Manually transcribing polyphonic music is an incredibly time-consuming and expert-driven task.

Impact: This "annotation bottleneck" has severely limited the application of powerful supervised deep learning techniques that thrive on massive datasets. The faster progress seen in piano transcription is a direct result of automatic data collection from instruments like the Yamaha Disklavier.

Hierarchical Levels of Transcription

AMT research has progressed through a hierarchy of increasing abstraction, mirroring the structure of music itself:

Frame-Level Transcription

Multi-Pitch Estimation: The most basic level, estimating which pitches are active within very short time frames (e.g., 10 ms) of the audio.

Early approaches used signal processing and probabilistic models, while later methods incorporated non-negative matrix factorization (NMF) and neural networks.

Note-Level Transcription

Note Tracking: Processes frame-level output to form discrete musical notes with defined pitch, onset time, and offset time.

Often treated as a post-processing step that uses Hidden Markov Models (HMMs) to connect pitch activations over time.
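To make the step from frames to notes concrete, here is a minimal sketch. It does not implement HMM smoothing; it is a simpler stand-in that groups consecutive active frames into notes and drops runs shorter than a minimum length. The function name, the `min_frames` value, and the example piano-roll are all assumptions made for illustration.

```python
def track_notes(active_frames, min_frames=3):
    """Turn one pitch's per-frame on/off decisions into (onset, offset) frame pairs.

    Runs of active frames shorter than min_frames are discarded as spurious.
    The offset index is exclusive.
    """
    notes = []
    start = None
    # A trailing False acts as a sentinel so a run reaching the end is closed.
    for i, on in enumerate(list(active_frames) + [False]):
        if on and start is None:
            start = i
        elif not on and start is not None:
            if i - start >= min_frames:
                notes.append((start, i))
            start = None
    return notes

HOP_MS = 10  # assumed frame hop of 10 ms

# Hypothetical frame-level output for MIDI pitch 60 (1 = active)
roll = {60: [0, 1, 1, 1, 1, 0, 1, 0, 0, 1, 1, 1]}

for pitch, frames in roll.items():
    for onset, offset in track_notes(frames):
        print(f"pitch {pitch}: {onset * HOP_MS} ms to {offset * HOP_MS} ms")
```

The single isolated active frame in the middle is treated as noise and removed, which is the kind of smoothing a probabilistic model would perform in a more principled way.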

Stream-Level Transcription

Multi-Pitch Streaming: Groups transcribed notes into distinct musical voices or streams, often corresponding to single instruments.

Requires understanding of voice leading and musical structure.

Notation-Level Transcription

The Ultimate Goal: Converting audio into a human-readable musical score.

Requires deep understanding of harmony (pitch spelling), rhythm (quantization), and instrumentation (staff assignment). Remains a significant open research challenge.
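Rhythm quantization is the easiest of these three sub-problems to illustrate. The sketch below snaps onset times to the nearest position on a metrical grid, under two strong assumptions that real systems cannot usually make: the tempo is known and constant, and the grid subdivision (four steps per beat here) is chosen in advance. The function name and parameters are hypothetical.

```python
from fractions import Fraction

def quantize_onsets(onsets_s, tempo_bpm, subdivisions=4):
    """Snap onset times in seconds to the nearest grid position, expressed in beats.

    subdivisions is the number of grid steps per beat (4 = sixteenth notes
    when the beat is a quarter note).
    """
    beat_s = 60.0 / tempo_bpm
    positions = []
    for t in onsets_s:
        steps = round(t / beat_s * subdivisions)
        positions.append(Fraction(steps, subdivisions))
    return positions

# Slightly uneven performed onsets at an assumed 120 BPM
performed = [0.02, 0.51, 0.74, 1.27]
print(quantize_onsets(performed, tempo_bpm=120))
```

Exact fractions are used for the beat positions so that later notation steps, such as choosing note values, do not inherit floating-point rounding.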

Algorithmic Approaches: NMF and Deep Learning

NMF models a magnitude spectrogram as the product of two non-negative matrices:

- Dictionary of spectral templates (e.g., C4 on piano)

- Matrix of temporal activations

Advantages: Linear, interpretable, and adaptable to new instruments with small datasets. It remains competitive, particularly when labeled training data is scarce.
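The factorization can be sketched in a few lines of NumPy. The example below uses the classic multiplicative update rules for the Euclidean objective, which is one common choice and not necessarily what any particular AMT system uses. The toy "spectrogram" is built from two made-up spectral templates rather than real audio, and the function name `nmf` is hypothetical.

```python
import numpy as np

def nmf(V, n_components, n_iter=200, eps=1e-9, seed=0):
    """Factor a non-negative matrix V (bins x frames) into W @ H.

    W holds spectral templates (bins x components);
    H holds temporal activations (components x frames).
    """
    rng = np.random.default_rng(seed)
    n_bins, n_frames = V.shape
    W = rng.random((n_bins, n_components)) + eps
    H = rng.random((n_components, n_frames)) + eps
    for _ in range(n_iter):
        # Multiplicative updates keep every entry non-negative.
        H *= (W.T @ V) / (W.T @ W @ H + eps)
        W *= (V @ H.T) / (W @ H @ H.T + eps)
    return W, H

# Toy data: two "notes", each with a fixed two-bin spectrum
templates = np.array([[1.0, 0.0], [0.5, 0.0], [0.0, 1.0], [0.0, 0.5]])
activations = np.array([[1.0, 1.0, 0.0, 0.0], [0.0, 1.0, 1.0, 0.0]])
V = templates @ activations

W, H = nmf(V, n_components=2)
rel_error = np.linalg.norm(V - W @ H) / np.linalg.norm(V)
print(f"relative reconstruction error: {rel_error:.4f}")
```

In a transcription setting the rows of H are read as per-note activations over time, which is what makes the model interpretable. When templates for an instrument are known in advance, W can be fixed and only H updated.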

Neural networks learn complex, non-linear functions:

- CNNs for spectral pattern learning

- RNNs/LSTMs for temporal dependencies

State of the art: Neural networks have achieved the best reported results, especially in piano transcription, where sufficient training data is available.

Google's Onsets and Frames: A Landmark Architecture

Google Brain's "Onsets and Frames" network explicitly recognizes the multi-task nature of transcription. It substantially improved piano transcription by dividing the problem into two parts:

Onset Detector

Detects the precise moment when notes begin. Specialized for identifying sharp, transient attacks.

Frame Activation

Estimates which pitches are active in each frame. Handles sustained portions of notes.

Key Innovation

This division of labor improves learning, as the sharp, transient nature of an onset has different temporal dynamics than the sustained portion of a note. The model combines both outputs for superior transcription accuracy.
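One way the two outputs can be combined at decoding time is to let a note begin only where the onset detector fires and then sustain it for as long as the frame detector stays active. The sketch below illustrates that rule for a single pitch using plain Python lists. It is a simplified illustration with a hypothetical function name and an assumed 0.5 threshold, not the published model's code.

```python
def decode_notes(onset_probs, frame_probs, threshold=0.5):
    """Combine per-frame onset and frame probabilities for one pitch.

    A note starts only on a rising edge of the onset detector while the frame
    detector is also active; it ends when the frame detector drops or a new
    onset arrives. Returns (start, end) frame pairs, end exclusive.
    """
    notes = []
    start = None
    prev_onset_high = False
    for i, (p_on, p_fr) in enumerate(zip(onset_probs, frame_probs)):
        onset_high = p_on >= threshold
        new_onset = onset_high and not prev_onset_high
        active = p_fr >= threshold
        if start is not None and (new_onset or not active):
            notes.append((start, i))  # close the current note
            start = None
        if start is None and new_onset and active:
            start = i
        prev_onset_high = onset_high
    if start is not None:
        notes.append((start, len(frame_probs)))
    return notes

# Hypothetical detector outputs: frames 5-6 are active without any onset
onsets = [0.1, 0.9, 0.8, 0.1, 0.1, 0.1, 0.1, 0.1]
frames = [0.1, 0.9, 0.9, 0.9, 0.2, 0.8, 0.8, 0.1]
print(decode_notes(onsets, frames))
```

Gating on onsets suppresses spurious notes that the frame detector alone would produce, at the cost of missing any note whose onset goes undetected.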

The MAESTRO Dataset: Enabling Piano Transcription

Dataset Statistics

- 200+ hours of performances

- International Piano-e-Competition

- Virtuosic classical repertoire

- Yamaha Disklavier recordings

Perfect Synchronization

- High-resolution audio

- Precise MIDI data

- Key velocity information

- Pedal positions captured

MAESTRO's precision makes it the gold standard for training and evaluating high-performance automatic piano transcription models and for generating realistic, expressive piano music.

Automatic Lyric Transcription: The Vocal Challenge

Automatic Lyric Transcription (ALT) applies ASR technology to extract lyrical content from songs. While modern ASR systems achieve near-human performance on clean speech, ALT presents unique challenges:

The vocal track is mixed with drums, bass, guitars, and other instruments, often at high volume. This creates a low signal-to-noise ratio where the "noise" is the music itself.

Critical Dependency: ALT performance is fundamentally dependent on the quality of an upstream Music Source Separation (MSS) module. Better vocal isolation directly leads to lower Word Error Rate (WER).

OpenAI's Whisper, pre-trained on 680,000 hours of labeled audio, has proven exceptionally robust for ALT when applied to isolated vocal stems:

- Transformer-based architecture

- Multilingual support

- Robust to various acoustic conditions

- Processes audio in 30-second windows, so longer songs must be segmented
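The segmentation requirement can be handled in several ways. The sketch below shows the simplest one, fixed-length windows with some overlap, and only computes the window boundaries. The 5-second overlap and the function name are assumptions for illustration. Fixed windows can cut through words, so practical pipelines often split at silences or rely on a library's built-in long-form handling instead.

```python
def chunk_bounds(duration_s, window_s=30.0, overlap_s=5.0):
    """Return (start, end) times in seconds covering the whole recording.

    Consecutive windows overlap by overlap_s so that words cut at one
    boundary appear whole in the neighbouring window.
    """
    if overlap_s >= window_s:
        raise ValueError("overlap_s must be smaller than window_s")
    step = window_s - overlap_s
    bounds = []
    start = 0.0
    while start < duration_s:
        end = min(start + window_s, duration_s)
        bounds.append((start, end))
        if end >= duration_s:
            break
        start += step
    return bounds

# A 70-second vocal stem
for start, end in chunk_bounds(70.0):
    print(f"{start:5.1f} s to {end:5.1f} s")
```

Transcripts from overlapping windows still have to be merged, for example by aligning the repeated words in each overlap region.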

Even with perfect vocal isolation, general-purpose ASR models exhibit systematic failures in musical contexts:

- Fail to transcribe non-lexical vocables ("ooh," "ah," "la")

- Often delete backing vocals

- Struggle with sung vs. spoken pronunciation

- Miss music-specific formatting (line breaks, verses)

Beyond WER: Evaluating Musical Transcription

Standard Word Error Rate (WER) is insufficient for evaluating lyric transcription quality. The Jam-ALT benchmark introduces readability-aware metrics:

Lyric-Aware WER

Accounts for non-standard spellings and contractions common in lyrics

Case-Sensitive WER

Measures capitalization accuracy for emphasis and proper nouns

Formatting Symbol Metrics

Precision, recall, and F-score for:

- Punctuation

- Parentheses (backing vocals)

- Line breaks

- Section breaks (double line breaks)
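To show why case sensitivity changes the score, here is a minimal word error rate computation based on word-level edit distance. This is a generic sketch and not the Jam-ALT implementation: it uses whitespace tokenization, and the function names and example lines are invented for illustration.

```python
def word_errors(ref_words, hyp_words):
    """Word-level Levenshtein distance (substitutions, insertions, deletions)."""
    prev = list(range(len(hyp_words) + 1))
    for i, r in enumerate(ref_words, start=1):
        curr = [i]
        for j, h in enumerate(hyp_words, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # match or substitution
        prev = curr
    return prev[-1]

def wer(reference, hypothesis, case_sensitive=False):
    """Word error rate: edit distance divided by the reference word count."""
    if not case_sensitive:
        reference, hypothesis = reference.lower(), hypothesis.lower()
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    return word_errors(ref, hyp) / len(ref)

reference = "Walk the River road tonight"
hypothesis = "walk the road tonight"
print(wer(reference, hypothesis))                       # case-insensitive
print(wer(reference, hypothesis, case_sensitive=True))  # case-sensitive
```

In the case-insensitive score only the dropped word counts as an error, while the case-sensitive score also penalizes the lowercase first word.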

Practical Implementation Resources

Piano Transcription

bytedance/piano_transcription

Multi-instrument

spotify/basic-pitch

Lyric Transcription

openai/whisper-large-v3

The example below transcribes an audio file to MIDI with Spotify's Basic Pitch, using the basic-pitch Python package:

```python
from basic_pitch.inference import predict

# Run Basic Pitch on an audio file; predict() loads the audio itself.
# It returns the raw model output, a pretty_midi.PrettyMIDI object,
# and a list of note events.
model_output, midi_data, note_events = predict("song.wav")

# Write the transcription to a MIDI file
midi_data.write("transcribed.mid")
```

Future Research Directions

Multi-Modal Learning

Combining audio with visual information (sheet music, performance videos) to improve transcription accuracy through cross-modal learning.

Few-Shot Instrument Adaptation

Developing models that can quickly adapt to new instruments with minimal training data, addressing the annotation bottleneck.

Real-Time Transcription

Optimizing models for live performance transcription with minimal latency, enabling real-time musical collaboration with AI.

Complete Score Generation

Moving beyond note detection to generate publication-ready scores with proper notation, dynamics, articulations, and formatting.

Key Takeaways

- Polyphonic complexity: Overlapping harmonics and temporal correlations make multi-instrument transcription extremely challenging.

- Data scarcity is critical: The annotation bottleneck remains the primary obstacle to progress, especially for non-piano instruments.

- Multi-task architectures excel: Models like Onsets and Frames show that dividing the problem leads to better results.

- Integration is key: Lyric transcription (ALT) depends on upstream music source separation (MSS), highlighting the importance of pipeline design.